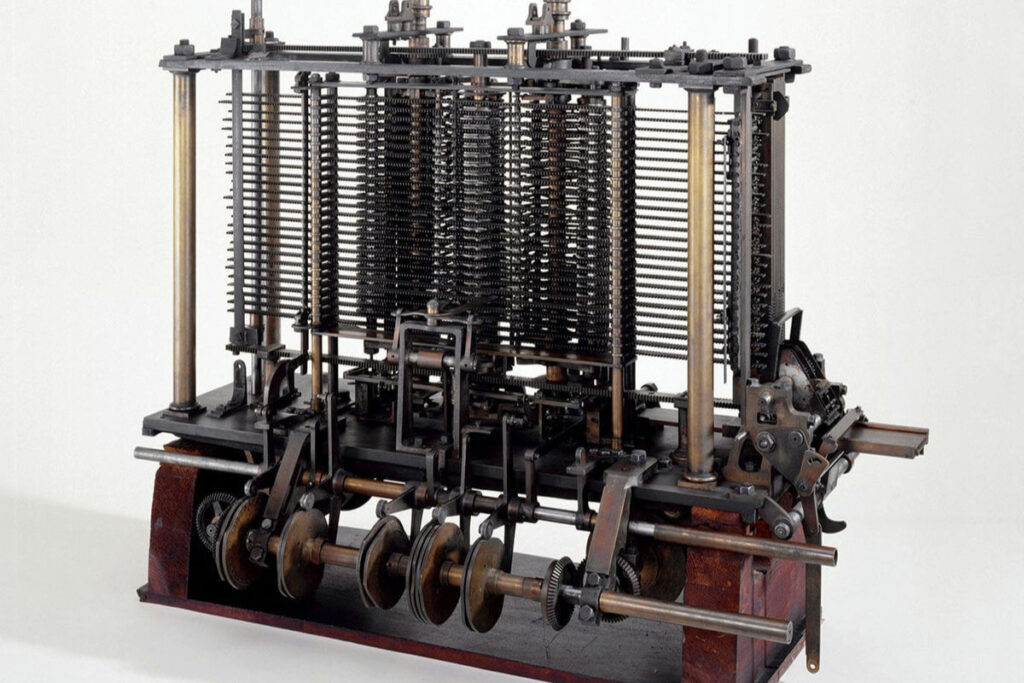

1. The Analytical Engine’s Blueprint

In the 1830s, English polymath Charles Babbage conceptualized the Analytical Engine, a design considered the theoretical ancestor of the modern computer. Although the machine was never completed, Babbage’s design included an arithmetic unit (which he called the “mill”), conditional branching, and integrated memory (the “store”), outlining the structure of a general-purpose programmable machine. This visionary work established the foundational logic that subsequent generations of computer scientists would build upon, showing in principle that calculation could be mechanized far beyond simple arithmetic.

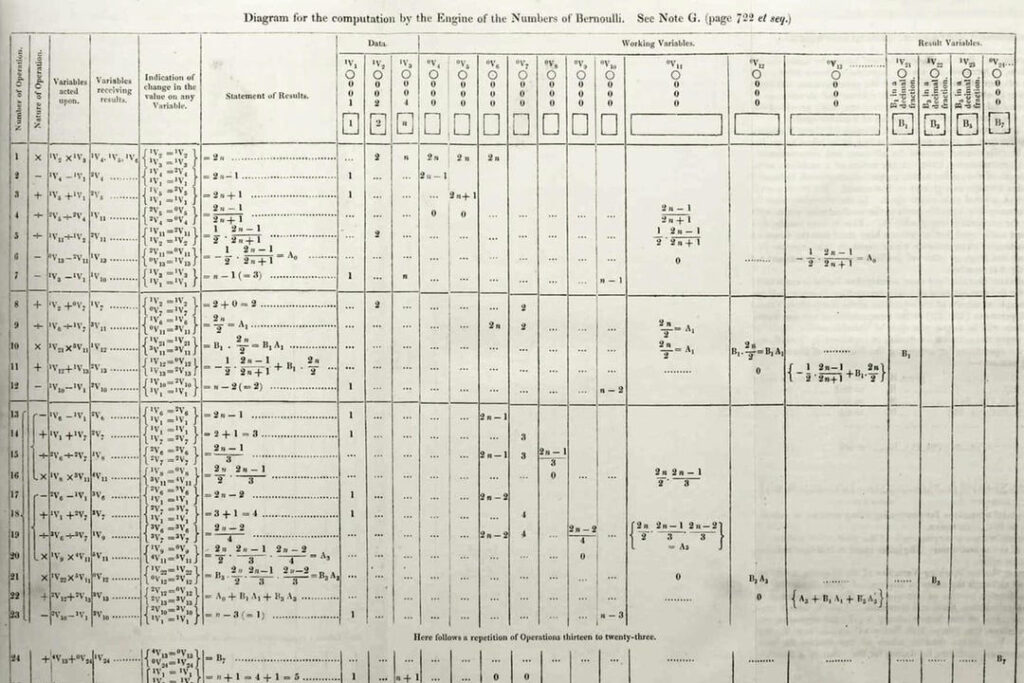

2. The First Programmer’s Algorithm

Ada Lovelace, working alongside Babbage, recognized that the Analytical Engine’s potential extended beyond pure number-crunching. In 1843, she published notes that included an algorithm (a step-by-step set of operations) for the machine to carry out in order to calculate Bernoulli numbers. This work is widely regarded as the world’s first published computer program, and it is why Lovelace is often called the first computer programmer. Her notes also introduced the revolutionary idea that a machine could manipulate symbols of any kind, not just numbers.
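To give a feel for what “an algorithm to calculate Bernoulli numbers” means, here is a minimal modern sketch in Python. It is not a transcription of Lovelace’s published procedure: it assumes the standard recurrence in which the sum of C(m+1, k)·B_k over k = 0..m is zero for m ≥ 1, with B_0 = 1, and the function name `bernoulli_numbers` is made up for this illustration.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n):
    """Return [B_0, ..., B_n] as exact fractions (convention B_1 = -1/2).

    Assumed recurrence (a modern textbook form, not Lovelace's own steps):
        sum_{k=0}^{m} C(m+1, k) * B_k = 0   for m >= 1,  with B_0 = 1.
    """
    b = [Fraction(1)]
    for m in range(1, n + 1):
        # Sum the already-known terms, then solve the recurrence for B_m.
        acc = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-acc / (m + 1))
    return b


for index, value in enumerate(bernoulli_numbers(8)):
    print(f"B_{index} = {value}")
```

Each new value depends only on the ones computed before it, which is the kind of repeated, mechanical step that a programmable machine is suited to.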

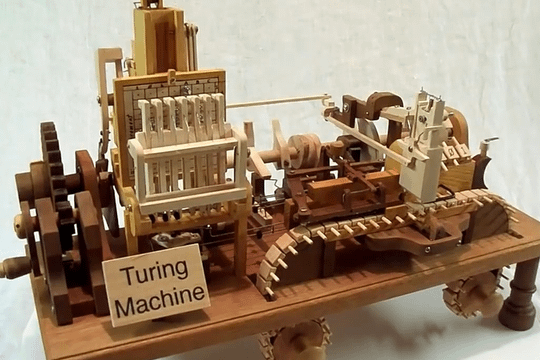

3. The Turing Machine Concept

In 1936, mathematician Alan Turing introduced the concept of the “Turing machine,” a theoretical device capable of carrying out any algorithm, and in doing so defined what it means for something to be “computable.” This abstract model provided the mathematical foundation for digital computers and modern computing theory. Turing’s work demonstrated that a single “universal” machine could, in principle, perform the functions of any other such machine, cementing the idea of a universal digital computer and laying the intellectual groundwork for the entire digital revolution.
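The idea is easier to see with a toy example. The sketch below is a minimal Turing machine simulator in Python; the rule table, the state names (`start`, `carry`, `halt`), and the function name are all invented for this illustration and do not come from Turing’s paper. The sample rules add one to a binary number written on the tape.

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=10_000):
    """Run a one-tape machine until it reaches the 'halt' state.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L", "R", or "N" (stay put). All names are illustrative.
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += {"L": -1, "R": 1, "N": 0}[move]
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# Hypothetical rule table: walk to the right end, then carry leftwards.
INCREMENT_RULES = {
    ("start", "0"): ("0", "R", "start"),
    ("start", "1"): ("1", "R", "start"),
    ("start", "_"): ("_", "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),
    ("carry", "0"): ("1", "N", "halt"),
    ("carry", "_"): ("1", "N", "halt"),
}

print(run_turing_machine(INCREMENT_RULES, "1011"))  # binary 11 plus 1
```

Swapping in a different rule table changes what the same simulator computes, which is a small-scale version of the “one machine, any task” idea.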

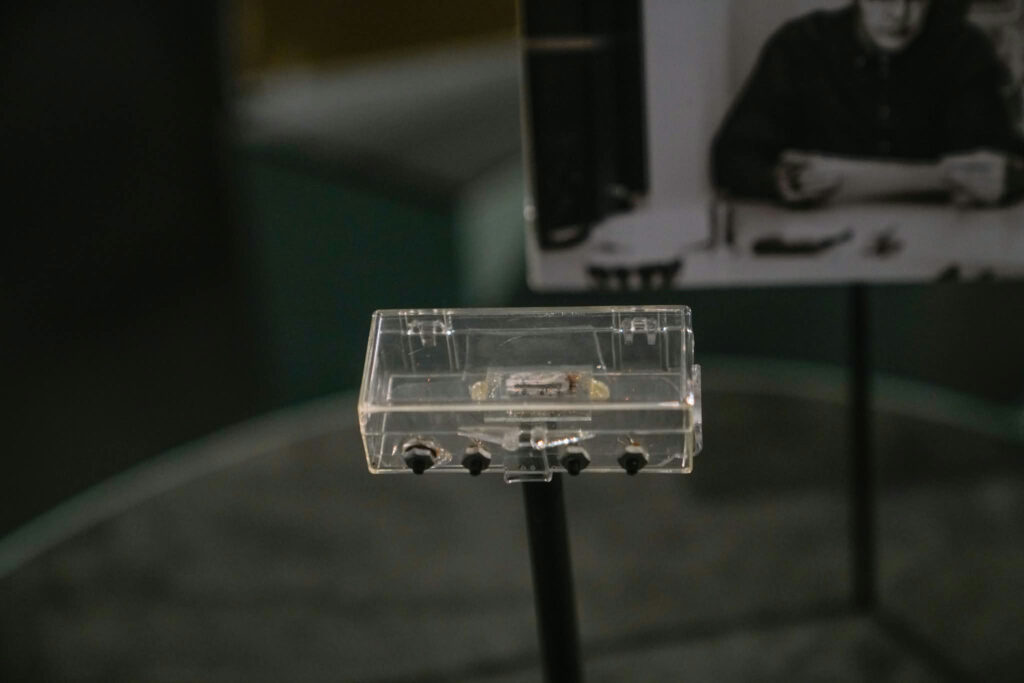

4. The Transistor’s Miniature Revolution

The invention of the transistor at Bell Labs in 1947 by John Bardeen, Walter Brattain, and William Shockley was a watershed moment. Transistors replaced the bulky, hot, and unreliable vacuum tubes of early computers: they were smaller, consumed less power, were more reliable, and could switch on and off much faster. Their adoption marked the beginning of the second generation of computers and enabled the miniaturization and speed gains that computers needed to move out of climate-controlled labs and into the commercial sphere.

5. The Integrated Circuit’s Birth

In 1958, Jack Kilby at Texas Instruments demonstrated the first integrated circuit (IC), or microchip, by fabricating all the components of a circuit on a single, small piece of semiconductor material. This breakthrough solved the problem of wiring together a vast number of individual components and dramatically reduced the size and cost of electronic circuits. The IC made it possible to create highly complex and powerful computers that were still affordable and compact, leading to the development of the microprocessor and paving the way for the personal computer.

6. The Commercial Computer Arrives (UNIVAC I)

The UNIVAC I (Universal Automatic Computer I), delivered in 1951, was the first general-purpose computer designed and sold commercially in the United States, moving computing from military and academic laboratories into the business world. Its first customer was the U.S. Census Bureau, but its public fame came when it correctly predicted the result of the 1952 presidential election on live television, astonishing the public. This event introduced the notion that computers could be powerful tools for data processing, analysis, and forecasting outside of purely scientific applications.

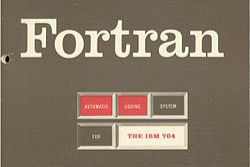

7. Birth of Modern Programming Languages (FORTRAN)

Developed by a team at IBM led by John Backus, FORTRAN (Formula Translation) was released in 1957 as the first widely used high-level programming language. Unlike previous languages that required tedious, machine-specific instructions, FORTRAN allowed scientists and engineers to write programs using a syntax much closer to human language and mathematical notation. This abstraction made programming more accessible and efficient, accelerating software development and making computer applications practical for a broader range of complex, real-world problems.

8. The Mouse and GUI’s Debut

In a groundbreaking 1968 demonstration, Douglas Engelbart unveiled a comprehensive system that included the computer mouse, on-screen windows, hypertext linking, and collaborative work tools. This event, now legendary as “The Mother of All Demos,” previewed the graphical, interactive style of personal computing that would later make computers intuitive for the average user. Engelbart showed that computing could be an interactive, personal, and profoundly collaborative tool, shifting the focus from calculation to communication and information management.

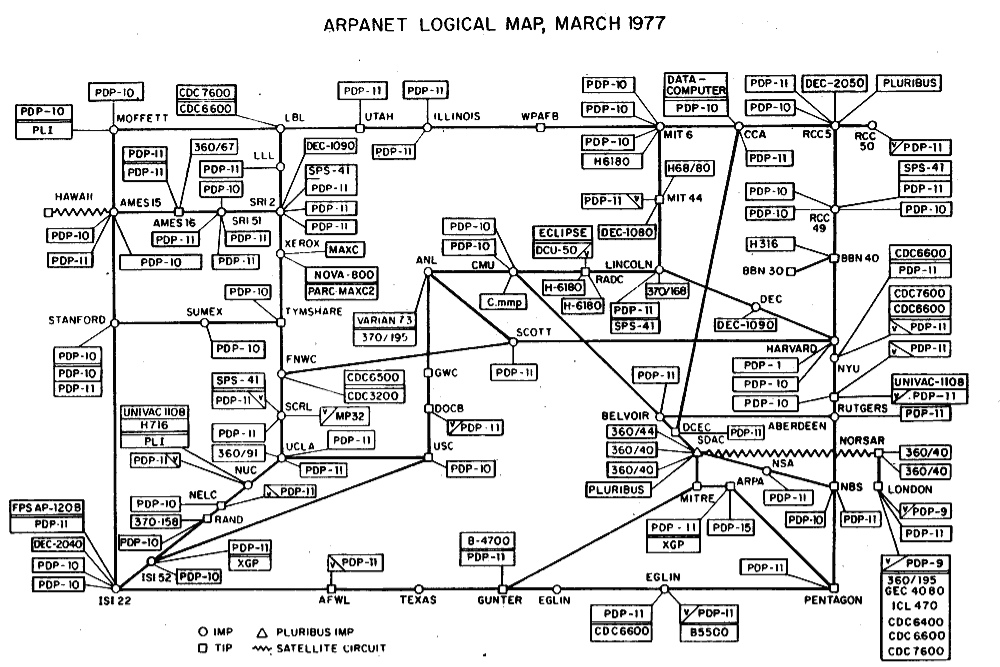

9. ARPANET’s First Connection

In 1969, the ARPANET, the precursor to the modern internet, achieved its first host-to-host connection between UCLA and the Stanford Research Institute. It successfully used packet switching, a revolutionary method that breaks data into small blocks that can travel over different paths and are reassembled at the destination. Initially a research tool for the U.S. Department of Defense’s Advanced Research Projects Agency, this network proved the networking concepts and built the early infrastructure that would eventually scale up to become the interconnected global internet.
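The core idea of packet switching fits in a few lines of Python. This is only a toy sketch of “split, send in any order, reassemble,” not a model of how ARPANET hardware or its protocols actually worked; the function names and the 8-byte packet size are assumptions made for the example.

```python
import random


def split_into_packets(message: bytes, size: int) -> list[tuple[int, bytes]]:
    """Cut the message into (sequence_number, payload) blocks of at most `size` bytes."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [
        (seq, message[start:start + size])
        for seq, start in enumerate(range(0, len(message), size))
    ]


def reassemble(packets: list[tuple[int, bytes]]) -> bytes:
    """Put packets back in sequence order and join their payloads."""
    ordered = sorted(packets, key=lambda packet: packet[0])
    return b"".join(payload for _, payload in ordered)


message = b"Packets may take different routes to the same host."
packets = split_into_packets(message, size=8)
random.shuffle(packets)  # stand-in for packets arriving out of order
print(reassemble(packets) == message)
```

The sequence number attached to each block is what lets the receiver rebuild the original message no matter which path, or in which order, the blocks arrived.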

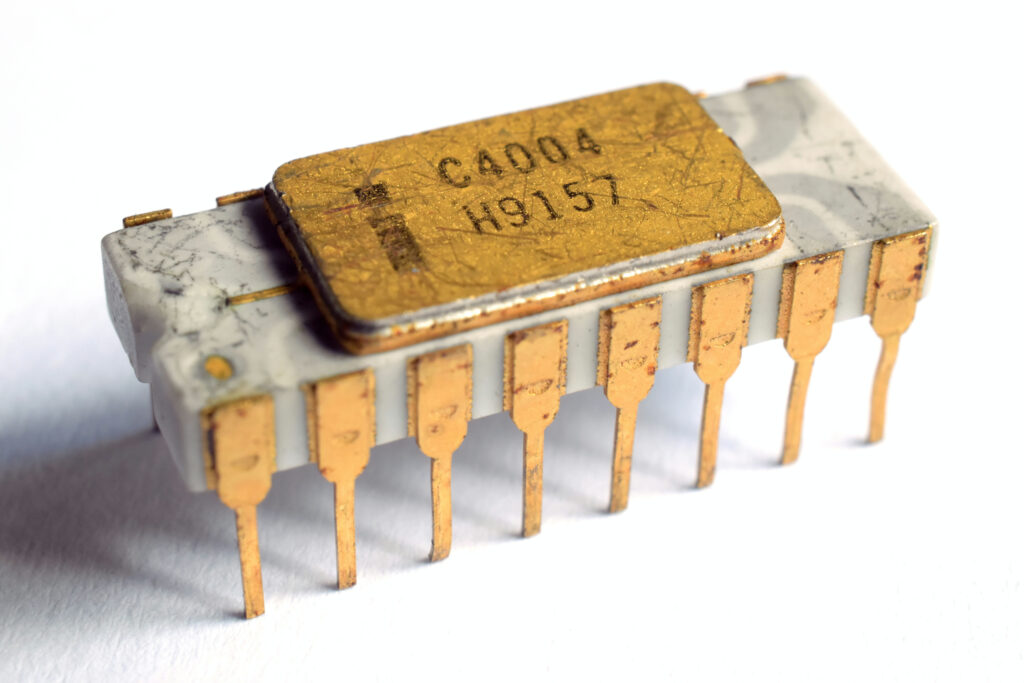

10. The First Microprocessor (Intel 4004)

Intel introduced the 4004 microprocessor in 1971. It was the first commercially available chip to place all the processing elements of a computer’s central processing unit on a single, tiny integrated circuit. Designed initially for the Japanese calculator company Busicom, it dramatically reduced the size and cost of computer processing. This single chip set the path toward miniaturized, mass-producible, and affordable computers, and toward the personal computer revolution of the late 1970s.

11. Ethernet’s Local Network Standard

In 1973, Robert Metcalfe, while working at Xerox’s Palo Alto Research Center (PARC), wrote a memo describing the Ethernet network system to connect multiple computers and peripherals locally. This development established a highly efficient, fast, and relatively inexpensive way for devices in a shared physical space to communicate and share resources, such as printers and files. Ethernet quickly became the de facto standard for Local Area Networks (LANs) and remains the core technology powering wired corporate and home networks today.

12. The Killer App for Business (VisiCalc)

Released in 1979, VisiCalc was the first electronic spreadsheet program for personal computers, specifically the Apple II. Before VisiCalc, the idea of owning a personal computer for business was largely dismissed, but the power of instant recalculation transformed complex financial planning. It became the “killer app” that demonstrated the immense business value of the personal computer, convincing many companies and individuals to buy a computer solely to run the software and sharply accelerating the market’s growth.

13. The IBM PC Standardized Computing

IBM’s introduction of the IBM Personal Computer (Model 5150) in 1981 legitimized the personal computer in the corporate world. Unlike earlier hobbyist machines, the IBM PC was seen as a serious business tool, leading to massive adoption. Crucially, IBM relied on an “open architecture,” using components and software from outside vendors like Microsoft (for the operating system, which IBM sold as PC DOS and Microsoft licensed to others as MS-DOS) and Intel (for the microprocessor). This decision fostered a massive, standardized ecosystem of compatible software and hardware, cementing the PC’s dominance.

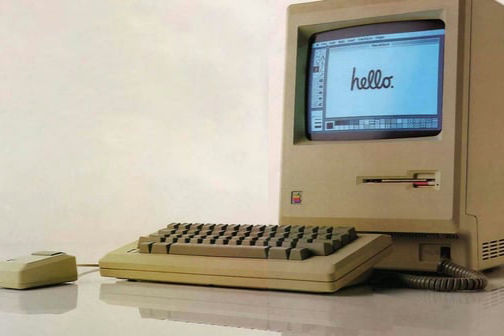

14. The User-Friendly Macintosh

In 1984, Apple introduced the Macintosh, the first commercially successful computer to feature a mouse and a graphical user interface (GUI), making computing accessible and enjoyable for the non-technical user. Its “plug-and-play” simplicity and intuitive desktop metaphor were a radical departure from the text-based command line interfaces common at the time. The Macintosh brought to the masses the vision of interactive, approachable computing first demonstrated by Engelbart, focusing on a visually driven experience.

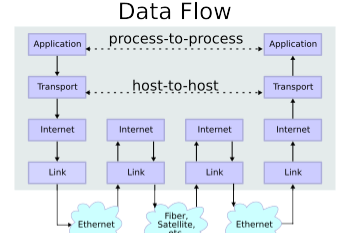

15. TCP/IP Becomes the Standard

In 1983, the U.S. Department of Defense officially mandated that all computers connected to ARPANET use the Transmission Control Protocol/Internet Protocol (TCP/IP), the set of rules that governs how data is exchanged over the internet. This standardization was critical, establishing a universally accepted, non-proprietary language that allowed diverse, independent networks to reliably communicate with one another. TCP/IP’s adoption was the single most important technical step in transitioning ARPANET into the truly global, interconnected Internet.

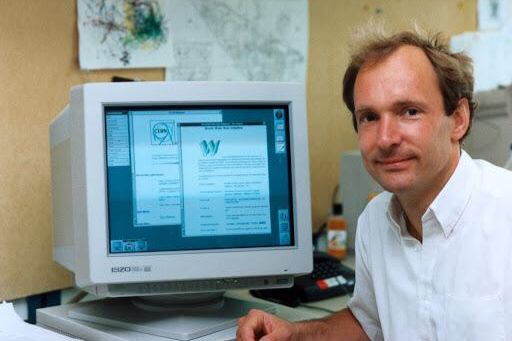

16. The World Wide Web is Born

In 1989, Sir Tim Berners-Lee, a scientist at CERN, proposed a system for information management that utilized hypertext to link documents across a network. This proposal evolved into the World Wide Web, which, alongside the introduction of the first web browser in 1990, transformed the Internet from a research network into a user-friendly, vast public space. The Web made information sharing easy and created a platform for the explosion of e-commerce, media, and social interaction that followed.

17. Google Reinvents Search

Founded in 1998 by Stanford Ph.D. students Larry Page and Sergey Brin, the search engine Google introduced the PageRank algorithm, which ranked search results based on the number and quality of links pointing to a page. This approach delivered far more relevant search results than those of its competitors. Google quickly became the dominant tool for navigating the rapidly expanding World Wide Web, effectively organizing the world’s information and becoming the primary gateway for billions of users accessing the internet.
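For readers curious how “number and quality of links” can become a score, here is a simplified Python sketch of the PageRank idea using repeated averaging (power iteration). It is a textbook-style illustration, not Google’s production system: the damping factor of 0.85, the iteration count, the function name, and the three-page example web are all assumptions.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Estimate a rank for every page in `links` (page -> list of pages it links to).

    Simplified sketch: ranks start equal and are redistributed along links
    a fixed number of times. Parameter values are illustrative assumptions.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page keeps a small baseline share regardless of links.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its current rank evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical three-page web: "a" and "b" both link to "c"; "c" links back to "a".
example_web = {"a": ["c"], "b": ["c"], "c": ["a"]}
for page, score in sorted(pagerank(example_web).items(), key=lambda item: -item[1]):
    print(page, round(score, 3))
```

In this small example, the page with two incoming links ends up on top, and the page it links to outranks the page nobody links to, which is the “quality of links” effect in miniature.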

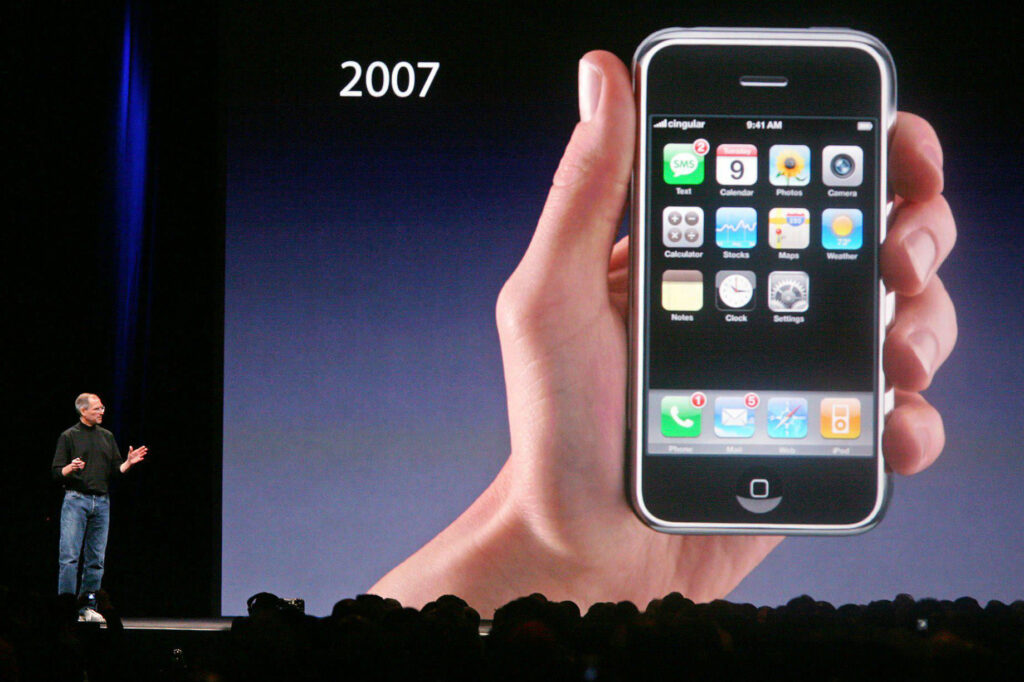

18. The Smartphone Convergence (iPhone)

The 2007 release of the Apple iPhone marked a profound shift by converging a mobile phone, an iPod, and an internet communicator into a single device with a multi-touch interface. It popularized mobile internet access, ushering in the post-PC era where computing was no longer desk-bound. The iPhone and its Android competitors democratized powerful computing, placing sophisticated applications, vast information, and global connectivity literally into the hands of billions, cementing computers’ absolute dominance in daily personal life.

This list confirms that “The Year Computers Took Over the World” wasn’t a single year, but a continuous series of brilliant, incremental steps and sudden, transformative leaps. From Charles Babbage’s theoretical designs in the 1830s to the pocket-sized supercomputers we carry today, each milestone built upon the last, weaving digital technology irrevocably into the fabric of human life. The story continues to unfold, ensuring the digital age remains the age of endless possibility.


This story, “The Year Computers Took Over the World,” was first published on Daily FETCH.