1. Breakfast Is the Most Important Meal of the Day

This popular decree was less about nutrition and more about selling cereal. In the early 20th century, companies like Kellogg’s pushed the idea that a morning meal was “essential,” linking it directly to energy, academic performance, and productivity. In reality, skipping breakfast isn’t inherently harmful; what matters most is the overall quality of your diet, not the timing of your first meal. Today, many nutritionists acknowledge that time-restricted eating and intermittent fasting can work well for some people, a sign that the old cereal advertisements oversimplified a much more complex nutritional story to move product off the shelves.

2. Drink Eight Glasses of Water a Day

The ubiquitous “8 glasses” guideline is an example of a vague idea that became an industry standard despite having no solid scientific basis. The amount of water a person actually needs varies greatly with climate, body size, activity level, and diet, and much of our daily fluid intake comes from the food we eat. Bottled water companies cleverly leaned on this simple, catchy rule to sell billions of plastic bottles, turning a nuanced discussion about hydration into a booming consumer industry. In fact, beverages like tea and coffee, along with fruits and vegetables, all contribute to hydration, but an arbitrary number was much easier to sell than complex, personalized nutritional advice.

3. Carrots Improve Your Eyesight

While carrots are undeniably healthy, rich in beta-carotene that the body converts into vitamin A, they won’t grant you superhuman night vision. The myth owes much of its popularity to World War II, when the British government claimed that the remarkable night-time success of Royal Air Force pilots was due to a diet high in carrots. This was a clever propaganda ploy designed to conceal the real reason for their success: the then-secret technology of airborne radar. The cover story worked, and the carrot myth stuck for generations. Parents everywhere began using the promise of sharper vision to encourage kids to eat their vegetables, turning a brilliant military misdirection into a long-standing, globally accepted “nutrition fact.”

4. A Glass of Wine a Day Is Good for You

The belief that a daily glass of wine offers significant heart protection was widely amplified by the so-called “French Paradox,” the observation that French people had lower rates of coronary heart disease despite a diet relatively high in saturated fat. The wine industry eagerly promoted this idea in the 1990s, suggesting that resveratrol and other compounds in red wine had a protective effect. However, the supposed benefits were greatly overstated, and later studies suggested that the health advantages were linked mostly to overall Mediterranean diet patterns and lifestyle, not the nightly Merlot. Wine is not medicine, however pervasive the ad campaigns made that claim seem.

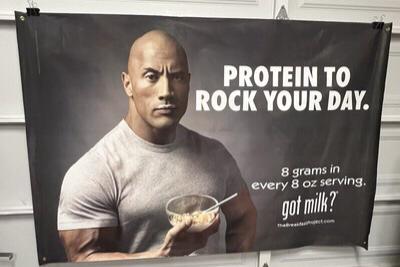

5. Milk Builds Strong Bones

The iconic “Got Milk?” campaign was a marketing masterpiece that cemented milk’s status as the essential key to strong bones. While calcium is crucial for skeletal health, milk is neither the only nor necessarily the best source. Research on global populations shows that many cultures consume little to no dairy yet do not have a higher risk of weak bones or fractures. Dairy boards spent millions framing milk as a non-negotiable part of the diet, overshadowing the fact that calcium is plentiful in leafy green vegetables, nuts, beans, and fortified non-dairy alternatives. In reality, milk is simply one option among many for maintaining bone strength.

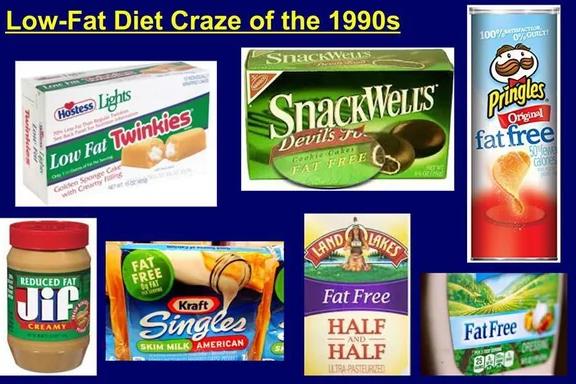

6. Low-Fat Foods Are Healthier

The obsession with “low-fat” products became a cultural and dietary phenomenon in the 1980s and 1990s, and food companies made fortunes from low-fat cookies, snacks, and yogurts. However, to make up for the flavor lost when the fat was removed, many of these products were loaded with added sugar, which often made them no healthier, and sometimes less healthy, than their full-fat counterparts. Modern nutrition science has since restored the reputation of healthy fats, which are vital for hormone production and for absorbing fat-soluble vitamins such as A, D, E, and K. The low-fat craze was less about wellness and more about appealing labels and sales figures, leaving consumers to spend decades unlearning the misleading lesson that fat was the primary dietary enemy.

7. Sugar Makes Kids Hyper

It’s widely accepted parental wisdom that a sugar rush sends kids spiraling into hyperactivity, yet a large body of clinical research, including double-blind studies, has consistently failed to find a direct link between sugar consumption and hyperactive behavior. The myth persists largely because children usually eat high-sugar treats at parties, holidays, and other highly stimulating social events. The more likely cause of their boundless energy is the excitement and novelty of the setting, not the candy itself. The food industry had no incentive to challenge this explanation, and the convenient myth became entrenched in family kitchens for decades.

8. Spinach Is Packed With Iron

This persistent myth is often blamed on a 19th-century decimal point error that supposedly overstated spinach’s iron content tenfold, although later investigations suggest the error story may itself be a myth. Either way, the iconic American cartoon character Popeye the Sailor Man adopted spinach as his source of instant, superhuman strength, which cemented its reputation as a miracle, iron-dense food. Spinach is certainly healthy, providing fiber, antioxidants, and various vitamins, but its iron content is not as uniquely supercharged as its reputation suggests, and the plant-based iron it contains is less readily absorbed than the iron in meat. A simple mistake or exaggeration, amplified by popular culture, snowballed into one of the most memorable food myths in modern nutrition history.

9. Cracking Knuckles Causes Arthritis

For generations, children were warned by parents, and even some doctors, that cracking their knuckles would inevitably lead to painful, debilitating arthritis later in life. However, scientific studies have consistently failed to support this fear. The “crack” is the sound of gas bubbles (primarily carbon dioxide) forming rapidly within the synovial fluid of the joint, and the action does not damage the joint cartilage. Research, including a famous self-experiment by a physician who cracked the knuckles of only one hand for decades and used the other as a control, has found no link to arthritis. The habit is generally harmless, if sometimes annoying; the myth simply proved too catchy and cautionary to be easily dismissed.

10. Gum Takes Seven Years to Digest

This frightening claim was often used to scare children into spitting out any gum they had accidentally swallowed. In reality, the synthetic gum base is indigestible, but it passes harmlessly through the digestive system and leaves the body within a few days, just like other indigestible material such as fiber. It does not stick to the stomach wall or linger in the intestines for years. The myth thrived in classrooms and parenting lore because it was a simple, memorable scare tactic; most likely, parents and teachers just wanted to discourage children from swallowing gum at all.

11. Shaving Makes Hair Grow Back Thicker

It is a common belief that shaving hair on the legs, face, or body causes it to grow back coarser, thicker, and darker. This is a long-standing illusion, not a biological truth. Shaving cuts the hair shaft straight across at the skin’s surface, removing its naturally tapered tip and leaving a blunt edge. As the hair grows back, this blunt end makes it feel coarser to the touch and look darker until it wears down. The hair follicle beneath the skin is completely unaffected by the razor. Barbers, beauticians, and companies selling shaving products had little reason to challenge the myth, since it encouraged customers to groom more often.

12. Sitting Too Close to the TV Ruins Your Eyes

This classic parental warning traces its origins to a genuine, but now obsolete, public health concern. In the late 1960s, some color television sets sold in the U.S. were found to emit excessive levels of X-ray radiation because of a manufacturing defect. Once the problem was fixed, the warning was no longer medically necessary, but the advice stubbornly remained in the parental lexicon. Today, sitting too close to any screen, whether a TV, tablet, or smartphone, may cause temporary eye strain and fatigue, but it does not cause permanent vision damage.

13. Eating Turkey Makes You Sleepy

The post-Thanksgiving nap is almost universally blamed on tryptophan, an amino acid found in turkey. Tryptophan is a building block for serotonin and melatonin, compounds that help regulate sleep. However, to produce a noticeable sedative effect, tryptophan would have to be consumed in relative isolation, on an empty stomach. In reality, the drowsiness that follows a large holiday meal comes from a combination of factors: overeating, especially large amounts of high-glycemic carbohydrates; drinking alcohol; and finally relaxing after a stressful day of cooking and socializing. The turkey myth became a convenient cultural punchline that the poultry industry did not discourage, even though foods like cheese, eggs, and nuts contain similar amounts of tryptophan without the reputation for causing food comas.

14. Cold Weather Gives You Colds

This enduring myth suggests that failing to bundle up will directly lead to catching a cold or the flu. In reality, these respiratory illnesses are caused by viruses, not by low temperatures. The myth became widely accepted because people do get sick more often in winter, but largely because they spend much more time indoors in close proximity to others, which makes it easier for viruses to spread. Cold air became an easy, yet scientifically incorrect, scapegoat for the annual sniffles. Good handwashing, avoiding sick people, and better indoor ventilation do far more to prevent illness than an extra scarf.

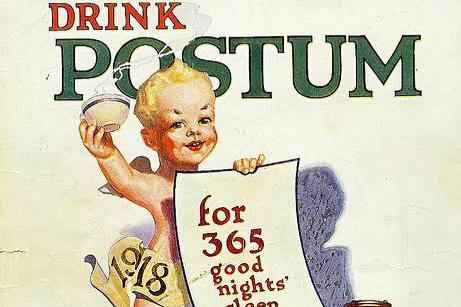

15. Coffee Stunts Your Growth

This decades-old warning, often aimed at adolescents, was largely fueled by early 20th-century marketing for “coffee alternatives” such as Postum, a popular grain beverage. These companies warned parents against giving children caffeine by claiming it would inhibit proper growth. While large amounts of coffee are generally not recommended for children because of their effects on sleep and the potential for anxiety, there is no credible scientific evidence that caffeine affects a person’s final adult height. The myth was pure advertising spin that lingered in family conversations for generations, showing how effective a targeted scare tactic can be.

16. MSG Is Dangerous

For decades, MSG (monosodium glutamate) was unfairly demonized as a toxic food additive blamed for a range of symptoms under the label “Chinese Restaurant Syndrome.” The panic began with an anecdotal 1968 letter to a medical journal, and it was fueled by poorly designed studies and amplified by sensational, often xenophobic, media coverage rather than solid science. Extensive research by health organizations has since concluded that MSG is safe for the vast majority of people and is no more inherently dangerous than common table salt. The unfounded fear grew so widespread that food companies capitalized on it by labeling their products “MSG-Free.” The ongoing scientific rehabilitation of MSG shows how prejudice and sensationalism can shape a public health panic.

17. Reading in Dim Light Ruins Eyesight

Many parents frustratingly insisted that children avoid reading under the blankets with a flashlight or in other low-light settings, warning that the habit would permanently damage their eyesight. While reading in dim light can cause temporary eye strain, fatigue, and headaches as the eyes work harder to focus, there is no clinical evidence that it causes permanent damage. The warning was often a convenient way to enforce a firm bedtime, and the idea persisted long after that context was forgotten. Today, the same concern is voiced about looking at smartphone and tablet screens in the dark, and the scientific conclusion is the same: temporary discomfort, not lasting harm.

18. Wet Hair Makes You Sick

This is another classic parental caution: going outside with wet hair will make you catch a cold or some other illness. In truth, illnesses like the common cold and the flu are caused by viruses, not by the weather or the dampness of your scalp. The myth likely became ingrained because colds are more common in the colder months, when stepping outside with wet hair is also at its most uncomfortable, so the two became linked in people’s minds. Companies that sold hats and scarves were happy to let the idea persist. The real downside of going out with wet hair is simple physical discomfort, a reminder of how well-meaning warnings from worried parents can become accepted, yet unfounded, medical dogma.

19. Eating at Night Makes You Gain More Weight

The widespread idea that calories eaten after a certain hour, such as 8 p.m., are more likely to be stored as fat is a myth. The body’s metabolism does not flip a switch that converts food into fat based on the time on the clock. The main factor that determines weight gain or loss is your total calorie intake over the course of a day, and over time, relative to your total energy expenditure. The myth was partly driven by the diet industry, which favored simple, marketable rules like “no late-night snacks.” In reality, a balanced evening snack that fits within your daily calorie needs is no more fattening than the same food eaten at lunchtime.

20. The Five-Second Rule

When it comes to food safety myths, few are as widespread and stubbornly believed as the “Five-Second Rule,” which holds that food dropped on the floor is safe to eat as long as you pick it up within five seconds. Scientific studies have repeatedly shown the rule to be false. Bacteria can transfer from a surface to food almost instantly, in less than a second, although longer contact generally transfers more. The rule likely began as playful reassurance among friends, but it spread everywhere and even became a quirky way for some companies to advertise “tough stomachs.” Research also shows that wet foods pick up bacteria especially fast, so even a quick retrieval offers little protection.

21. Wait 30 Minutes After Eating Before Swimming

Countless parents have insisted that jumping into a pool or the ocean right after a meal will lead to debilitating cramps and, potentially, drowning. There is no clinical or scientific evidence to support this claim. At worst, a very heavy meal diverts some blood flow to the digestive system, which might make a person feel slightly sluggish or uncomfortable during vigorous exercise, but that is not a medical risk. The rule was likely a simple, effective way to keep excited children resting for a while after lunch at summer camps and public pools. Despite its lack of medical basis, it became unbreakable gospel for a generation of swimmers.

22. Eggs Raise Cholesterol Too Much

For decades, eggs were unfairly demonized in health campaigns because of their high cholesterol content, and in the 1980s and 1990s they were branded a dangerous food to be avoided. However, later and more nuanced research has shown that, for the vast majority of people, dietary cholesterol has a much smaller effect on blood cholesterol levels than was previously assumed. Eggs are a nutrient-dense food, rich in high-quality protein, vitamins, and other beneficial nutrients such as choline. Their “bad egg” reputation stemmed from overly simplistic and alarmist health messages rather than comprehensive evidence. Nutritionists now widely agree that eggs can be part of a balanced, heart-healthy diet.

23. Low-Carb Diets Work for Everyone

The rise of the Atkins diet and other low-carbohydrate plans convinced millions of people that staple foods like bread, rice, and pasta were the primary enemies of weight loss and health. While significantly cutting carbohydrate intake can be an effective weight-loss strategy for some individuals, particularly those with insulin resistance, it is not a universally effective or sustainable solution for everyone. Food manufacturers quickly rushed to create and label thousands of products as “low-carb,” capitalizing on what became a multi-billion-dollar fad that often drastically oversold its long-term promise. As with all restrictive eating plans, success ultimately depends on the diet’s long-term sustainability and the total calorie deficit created, rather than a single, catchy rule about avoiding one macronutrient.

24. Multivitamins Guarantee Better Health

The supplement industry thrives on the promise that a single, comprehensive pill can effortlessly fill any nutritional gap in a person’s diet. The truth is that for people who already eat a varied, reasonably balanced diet, a daily multivitamin provides little to no measurable health benefit. Multivitamins can genuinely help people with a diagnosed deficiency or a very restricted diet, but they are not a magic shield against illness or chronic disease. Decades of expensive advertising blurred the line between “sometimes helpful” and “daily essential,” keeping the supplement business massive despite the narrow benefits these products deliver for most consumers.

25. Wine, Beer, and Liquor Warm You Up in Winter

Generations of people in cold climates have believed that alcohol, from a shot of liquor to a warm beer, is an effective way to “warm up” in the cold. In reality, the feeling of warmth is dangerously misleading. Alcohol is a vasodilator, meaning it causes blood vessels near the skin’s surface to widen. The extra blood flowing to the skin creates a feeling of warmth, but it also lets the body shed heat faster, causing core temperature to drop. Liquor and beer companies have long leaned into cozy, comforting marketing, but the scientific truth is that drinking alcohol in cold weather can increase the risk of dangerous hypothermia.

Which of these myths surprised you the most, or which one did you believe until just now?

This story, “25 Health Myths We All Fell For, And the Marketing Lies Behind Them,” was first published on Daily FETCH.