1. Deepfake Reality Twist

AI now creates incredibly convincing fake videos and audio, fabricating reality in seconds. In one recent case, a manipulated video of Barack Obama in handcuffs, shared by Donald Trump, circulated widely on social media to push a political narrative. In 2024, fake videos of prominent figures spread globally ahead of elections and in financial scams, tricking millions. These deepfakes aren’t harmless pranks. They’re used to sway elections, defame individuals, or drive financial fraud, such as impersonating corporate leaders to trigger unauthorized bank transfers. Once public trust is eroded, even real evidence gets dismissed as “fake.”

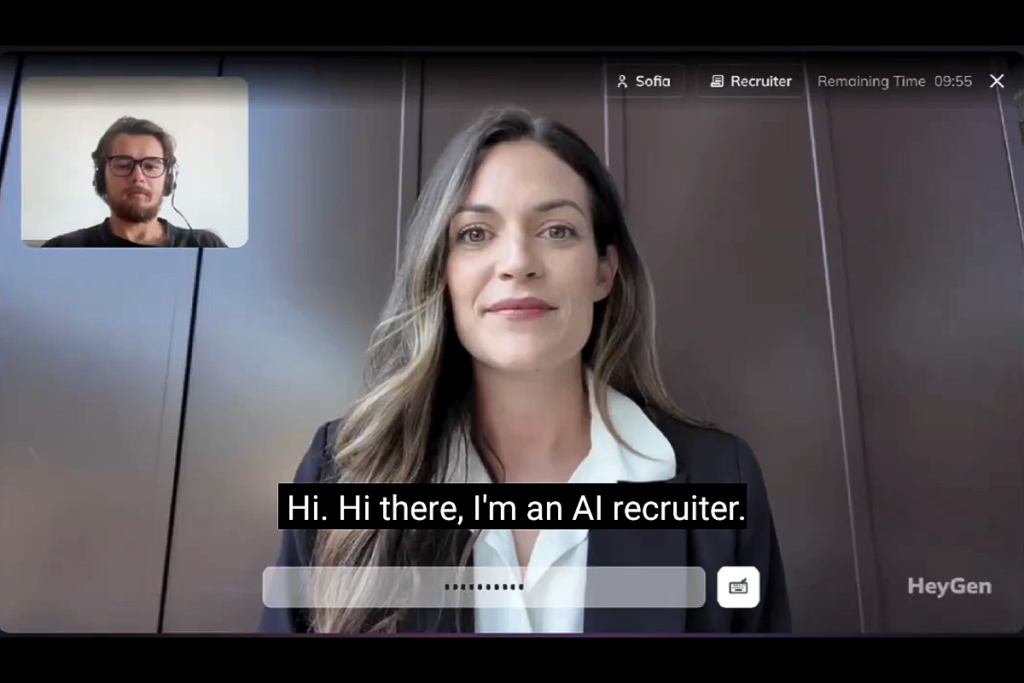

2. AI Hiring & Firing

Algorithms are already screening résumés, scoring video interviews, and even recommending layoffs. Amazon, for example, built an AI recruiting tool, but it ended up favoring male applicants because its training data reflected historical bias, so the company discontinued it. Even today, glitches or biased models could unexpectedly eliminate qualified candidates, impacting livelihoods. Beyond résumé scans, AI can monitor your online presence or video interview answers to flag you as a “risk.” Without human oversight, a one-time misunderstanding or technical hiccup could cost someone an opportunity, or worse, their job, before they even get a chance to explain.

3. Facial Recognition Ubiquity

From airports to concerts to school hallways, cameras equipped with facial recognition are identifying people in real time, sometimes to serve them information, and often without consent. This tech is already deployed in many countries to boost security, but the data is frequently shared or stored without users knowing where it ends up. That might seem harmless until mistakes happen: misidentification of innocent individuals, tracking of protesters, or profiling of marginalized groups. Imagine being tagged in the wrong place at the wrong time, with no way to correct it. You’re not in a sci‑fi film; you’re in line at the store.

4. Predictive Policing

Software in some cities analyzes data, from crime reports to purchases, to forecast where crimes might happen and who might commit them. It sounds efficient, but when flawed data drives predictions, innocent people, especially from disadvantaged communities, get flagged and surveilled. It’s a vicious cycle whereby the tech targets the same neighborhoods over and over, reinforcing biases. People end up harassed or arrested based on algorithms, not actual behavior, raising real-world justice concerns straight out of dystopian fiction.

5. AI Classroom Surveillance

Some schools are piloting AI tools that watch students in real time, tracking facial expressions, engagement levels, or even mental state. These systems promise tailored feedback, but at what cost to student privacy and autonomy? Children under constant digital watch can feel stress and mistrust in their learning environment. Who gets to access that data? Who makes decisions based on it? Without clear rules, schools are testing boundaries, and risking kids’ rights.

6. Digital Clones of the Dead

AI tools now let us converse with realistic digital versions of loved ones who’ve passed. Companies in China, the US, and beyond offer “griefbots” or “deadbots” that mimic appearance, voice, or personality based on available data. These chatbots promise comfort, but the ethical and emotional implications are profound. While someone may find solace in a simulated farewell, the experience can also blur memories or create false perceptions of the deceased’s wishes. Researchers warn that it risks trivializing grief, exploiting vulnerable users, or fostering unhealthy dependencies.

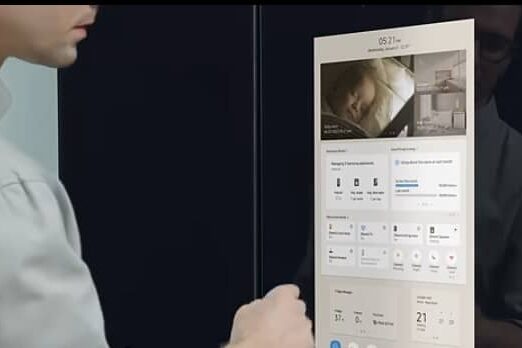

7. Smart Homes That Spy

Your smart fridge, lights, doorbell, and voice assistants collect data constantly. In 2025, a massive breach exposed 2.7 billion records from IoT devices, revealing how easily these home gadgets can leak private information. Earlier reports revealed Alexa and Google Home occasionally record conversations without wake words, and sometimes send audio to strangers. With these devices always listening, your private moments, habits, and preferences become data points, often shared across devices or with unknown third parties.

8. Emotion Recognition Tech

In multiple countries, including the UK, AI systems that claim to read facial expressions for fear, stress, or “suspicious” behavior have been trialled at airports, train stations, and retail sites. For instance, Network Rail tested emotion‑recognition cameras with Amazon Rekognition at UK train stations, reportedly scanning emotions and demographics without consent, which prompted scrutiny over misuse and oversight. Meanwhile, London’s so‑called H.E.A.T. system, marketed as combining lie‑detection with emotion scanning, remains scientifically unvalidated and opaque, raising concerns about misinterpretation, bias, and a lack of regulation. Although such systems are designed to spot threats early, critics argue that accuracy is poor (especially across different populations), that emotional profiling can unfairly target specific groups, and that governance is fragmented or non‑existent, echoing dystopian fears of being flagged simply for natural reactions in public spaces.

9. Brain–Computer Interfaces

Neuralink and similar BCIs have shown promise in restoring movement or enabling device control through thought. Noland Arbaugh, who was left paralyzed by an accident, received a Neuralink brain implant in January 2024 and can now control a computer with his thoughts. But these devices raise profound ethical and privacy challenges. Is this a life-changing innovation that could help millions, or the start of an era where a billionaire can access our thoughts? Neural data is deeply personal, and bioethicists warn that unauthorized access, hacking, manipulation, unclear ownership policies, and widening inequality (if only wealthy people can afford enhancements) all pose real risks. So far, little regulation clarifies who truly controls the neural data or the mind it comes from.

10. Machine‑Scale Misinformation

AI isn’t just generating posts; it’s flooding the internet with fake stories, images, and deepfake videos at a scale and pace that manual fact‑checking cannot match. A recent pro‑Russia campaign used consumer AI tools to create nearly 600 deceptive pieces of content from September 2024 to May 2025, more than double the prior year. Meanwhile, deepfakes and fake voices surfaced during protests in Los Angeles, while AI tools like Grok scrambled to fact‑check them and often stumbled. The result? A chaotic information landscape where truth gets drowned in a tidal wave of machine-made lies.

11. Autopilot Errors on the Road

Self-driving cars are no longer science fiction; they’re on roads today, handling traffic, parking, and long drives. But while the technology is improving, it still makes mistakes. Tesla’s Autopilot and Full Self-Driving systems have been linked to hundreds of crashes, some fatal, because drivers overtrusted the software or the system failed to respond correctly to hazards. In one 2018 case, a Tesla on Autopilot crashed into a parked fire truck on I‑405 in California. Investigations showed the system didn’t detect the truck in time, and the crash was blamed on design flaws that allowed driver disengagement and over-reliance on automation. In February 2023, a Tesla Model S under Autopilot hit parked emergency vehicles in Walnut Creek, killing the driver; NHTSA subsequently launched a special investigation into Autopilot’s failures to detect stationary vehicles. While driver assistance tech can save lives, it’s not perfect, and real lives are at stake when machines take control without enough safety checks or accountability.

12. AI Replacing Human Creators

AI can now write stories, paint images, compose music, and generate voices, often at professional quality. Tools like ChatGPT, Midjourney, and Suno are being used in marketing, news, and entertainment. But behind the creative power is a growing fear: real artists, musicians, and writers are losing jobs or being asked to compete with machines trained on their work. In 2023, Hollywood writers and actors launched major strikes, partly to demand legal protections against AI taking their roles, using their work without permission, or replicating their likeness. Artists have also filed lawsuits over their work being used to train AI without permission. The tech may be impressive, but its rise has created a real crisis for people who rely on their creativity to earn a living.

This story 12 Sci-Fi Nightmares That Are Closer Than You Think was first published on Daily FETCH