1. Meta Is a Single Company Running Multiple Global Platforms

Meta Platforms is the parent company behind Facebook, Instagram, and WhatsApp. While these apps feel separate to users, they share infrastructure, leadership, and long-term strategy. The company rebranded from Facebook, Inc. in 2021 to reflect its broader focus beyond social networking, including virtual reality, artificial intelligence, and immersive digital environments. Internally, engineering teams often work across platforms, so features developed for one app can influence another. This structure allows Meta to scale rapidly and deploy updates globally, but it also means policy decisions or technical changes can ripple across billions of accounts at once. Despite branding differences, Meta views its apps as parts of one ecosystem designed to keep users connected throughout their digital lives.

2. Your Feed Is Ranked by Thousands of Signals

What you see on Facebook or Instagram is not random or purely chronological. Meta’s ranking systems evaluate thousands of signals to decide which posts appear first. These signals include who you interact with most, how long you spend on certain posts, whether you comment or share similar content, and even how quickly you scroll past something. The goal is to predict what you are most likely to find meaningful or engaging. Meta has publicly stated that these systems rely heavily on machine learning models trained on user behavior patterns. While users often blame “the algorithm,” it is essentially a constantly updating prediction system that adjusts based on your actions, often changing what you see within minutes or hours of new activity.
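
To make the idea of signal-based ranking concrete, here is a minimal sketch in Python. It is not Meta's system: the signal names, the hand-picked weights, and the `Post` type are all assumptions made up for illustration, and a real ranking model would learn its weights from data instead of hard-coding them.

```python
from dataclasses import dataclass

# Hypothetical signal weights, chosen by hand for illustration only.
WEIGHTS = {
    "author_affinity": 3.0,   # how often the viewer interacts with the author
    "dwell_seconds": 0.5,     # predicted time the viewer spends on the post
    "share_likelihood": 2.0,  # predicted chance of a comment or share
    "fast_scroll": -1.5,      # penalty when similar posts were skipped quickly
}


@dataclass
class Post:
    post_id: str
    signals: dict[str, float]


def score(post: Post) -> float:
    """Weighted sum of the signals; a missing signal counts as zero."""
    return sum(w * post.signals.get(name, 0.0) for name, w in WEIGHTS.items())


def rank_feed(posts: list[Post]) -> list[Post]:
    """Return the posts ordered from highest to lowest predicted interest."""
    return sorted(posts, key=score, reverse=True)


posts = [
    Post("news_article", {"author_affinity": 0.1, "dwell_seconds": 2.0, "fast_scroll": 1.0}),
    Post("friend_photo", {"author_affinity": 0.9, "dwell_seconds": 4.0, "share_likelihood": 0.3}),
    Post("viral_video", {"author_affinity": 0.2, "dwell_seconds": 6.0, "share_likelihood": 0.5}),
]
ranked_ids = [p.post_id for p in rank_feed(posts)]
print(ranked_ids)  # ['friend_photo', 'viral_video', 'news_article']
```

Because the score is recomputed from the latest signals every time, a change in behavior (say, scrolling past several similar posts) reorders the feed on the next refresh, which is the "constantly updating" effect described above.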

3. Human Reviewers Still Play a Major Role in Content Decisions

Although automation handles massive volumes of content, Meta still relies on thousands of human reviewers worldwide. These reviewers, many working through partner firms, help enforce community standards by examining posts flagged by users or AI systems. They review content involving hate speech, misinformation, violence, and other sensitive issues. Meta publishes transparency reports showing that humans remain essential for context-heavy decisions that algorithms struggle with. Reviewers follow detailed rulebooks that are updated frequently, sometimes weekly, to reflect policy changes. This human layer is one reason moderation outcomes can feel inconsistent, as decisions depend on interpretation, cultural context, and evolving guidelines rather than fixed rules alone.

4. WhatsApp Messages Are End-to-End Encrypted by Default

WhatsApp uses end-to-end encryption for personal messages, meaning only the sender and recipient can read the content. Even Meta itself cannot see message text, photos, or voice notes in transit. This system was implemented globally in 2016 using the Signal Protocol, a widely respected encryption standard. While Meta can access limited metadata, such as phone numbers and timestamps, the actual message content remains private. This design has made WhatsApp popular for secure communication but has also created challenges for law enforcement and content moderation. Meta has repeatedly stated that weakening encryption would compromise user safety, highlighting the company’s complex balance between privacy, regulation, and public pressure.

5. Instagram Tests Features on Small Groups First

Before most new Instagram features roll out globally, Meta quietly tests them on small user groups. This process, known as A/B testing, allows engineers to compare different versions of a feature and measure user response. For example, experiments with hiding like counts or changing feed layouts were first tested in select countries or user segments. Meta analyzes metrics such as time spent, posting frequency, and user satisfaction before deciding whether to expand or abandon a feature. Many ideas never reach the wider public because they fail these tests. This experimental culture helps Meta refine products quickly, but it also means users are often part of live experiments without explicit notification.
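
For readers curious how a small test group can be carved out, the sketch below shows one common approach: hashing a user ID into a stable bucket. It is a generic illustration, not Instagram's actual tooling; the function names, the experiment name, and the 5% treatment share are assumptions.

```python
import hashlib
from statistics import mean


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.05) -> str:
    """Deterministically place a user in 'treatment' or 'control'.

    Hashing the experiment name together with the user ID gives each user
    a stable bucket, so they see the same version on every visit.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # value in [0, 1)
    return "treatment" if bucket < treatment_share else "control"


def compare(metric_by_user: dict[str, float], experiment: str,
            treatment_share: float = 0.05) -> dict[str, float]:
    """Average a per-user metric (e.g. minutes spent) within each group."""
    groups: dict[str, list[float]] = {"control": [], "treatment": []}
    for user_id, value in metric_by_user.items():
        groups[assign_variant(user_id, experiment, treatment_share)].append(value)
    return {name: mean(values) for name, values in groups.items() if values}


# Hypothetical experiment: 10,000 synthetic users, about 5% in treatment.
users = [f"user{i}" for i in range(10_000)]
variants = [assign_variant(u, "hide_like_counts") for u in users]
treatment_fraction = variants.count("treatment") / len(users)

# Every synthetic user gets the same metric value here, so the group
# averages match; real data would differ between the groups.
averages = compare({u: 10.0 for u in users}, "hide_like_counts")
```

A real experiment would also check whether the difference between the group averages is statistically significant before expanding or abandoning the feature.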

6. Meta Tracks Behavior Across Its Apps

Meta’s platforms are designed to share certain data across Facebook, Instagram, and WhatsApp to improve functionality and advertising. For example, interactions on Instagram can influence the ads you see on Facebook. While WhatsApp message content is encrypted, account information such as phone numbers can still support cross-platform features. Meta explains that this integration helps reduce spam, personalize experiences, and maintain security. Regulators in multiple regions have scrutinized this practice, leading to policy updates and user controls. Despite these changes, Meta’s ability to connect activity across apps remains a core part of how it understands user behavior at scale.

7. Advertising Is the Core Revenue Engine

Nearly all of Meta’s revenue comes from advertising. Businesses pay to show ads targeted by demographics, interests, location, and online behavior. Meta does not sell personal data directly; instead, advertisers choose audiences, and Meta’s systems place ads accordingly. The effectiveness of this model depends on vast amounts of user interaction data, which helps predict what ads users are likely to engage with. Even small actions, like pausing on a video, can influence ad targeting. This ad-driven structure explains why Meta prioritizes engagement and time spent, as more attention creates more opportunities for advertisers to reach potential customers.

8. Facebook Groups Are Moderated Mostly by Volunteers

Most Facebook Groups rely on volunteer administrators and moderators rather than Meta staff. These individuals set group rules, approve posts, and remove members when necessary. Meta provides tools such as keyword alerts and moderation queues, but enforcement largely depends on unpaid community members. This decentralized model allows millions of groups to function independently, from local communities to global interest networks. However, it also means moderation quality varies widely. Meta steps in only when content violates platform-wide policies or is reported at scale, making group admins the first and often most influential line of control within these digital communities.

9. Meta Uses AI to Detect Harmful Content Before Reports

Meta’s artificial intelligence systems proactively scan posts, images, and videos to detect potential policy violations. According to Meta’s transparency reports, a significant percentage of harmful content is removed before any user reports it. AI models are trained on large datasets to recognize patterns linked to spam, nudity, hate speech, and violence. While these systems are fast, they are not perfect and can misclassify content, leading to appeals and reversals. Meta continues to invest heavily in AI research to improve accuracy, viewing automation as the only way to moderate content at the scale of billions of daily posts.
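
One way to picture how automated detection and human review can fit together is a simple threshold rule on a classifier's confidence score. The sketch below is an assumption for illustration only: the thresholds, the labels, and the `proactive_rate` helper are hypothetical and are not taken from Meta's systems or reports.

```python
# Hypothetical thresholds on a model's violation score (0.0 to 1.0).
REMOVE_THRESHOLD = 0.95  # confident enough to act automatically
REVIEW_THRESHOLD = 0.60  # uncertain: send to a human reviewer


def route(violation_score: float) -> str:
    """Decide what happens to a post based on the model's confidence."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= REMOVE_THRESHOLD:
        return "auto_remove"
    if violation_score >= REVIEW_THRESHOLD:
        return "human_review"
    return "leave_up"


def proactive_rate(found_by_ai: int, found_by_reports: int) -> float:
    """Share of actioned content that was detected before any user report."""
    total = found_by_ai + found_by_reports
    return found_by_ai / total if total else 0.0


decisions = [route(s) for s in (0.99, 0.70, 0.10)]
print(decisions)  # ['auto_remove', 'human_review', 'leave_up']
```

Lowering either threshold catches more harmful content but also produces more mistaken removals or a longer review queue, which is the trade-off behind the appeals and reversals mentioned above.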

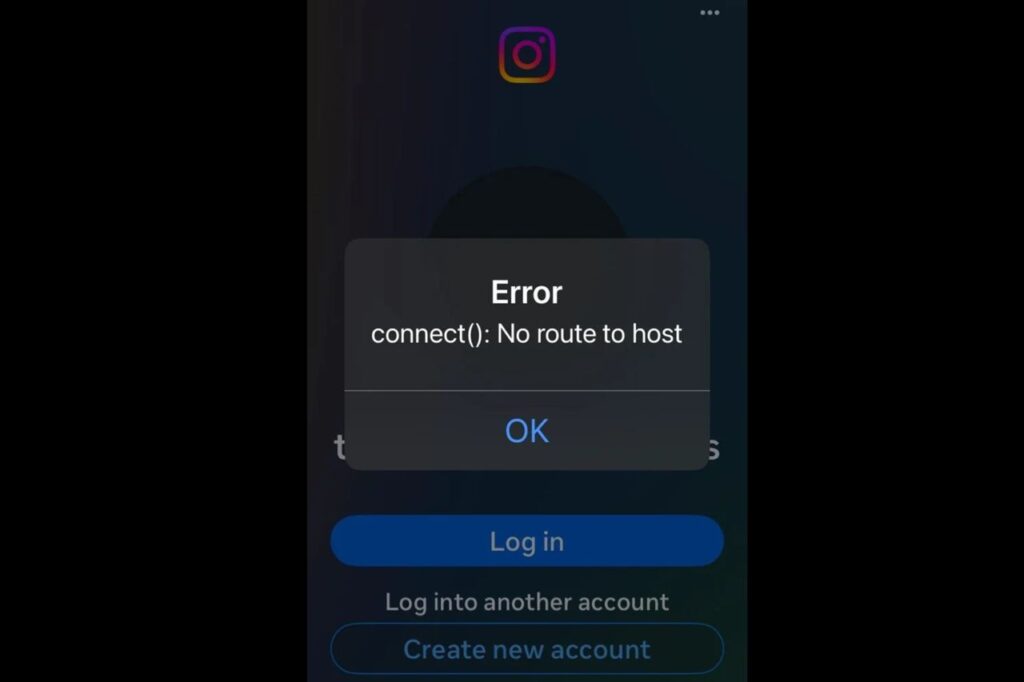

10. Major Outages Are Usually Internal, Not External Attacks

When Facebook, Instagram, and WhatsApp go down simultaneously, the cause is often internal configuration errors rather than cyberattacks. Meta has publicly explained past global outages as the result of networking changes that disrupted communication between data centers. Because all platforms share core infrastructure, a single technical issue can affect billions of users worldwide. These incidents highlight how tightly integrated Meta’s systems are. Engineers typically restore service within hours, but the disruptions reveal the risks of centralized digital infrastructure and the massive responsibility involved in keeping global communication tools running smoothly.

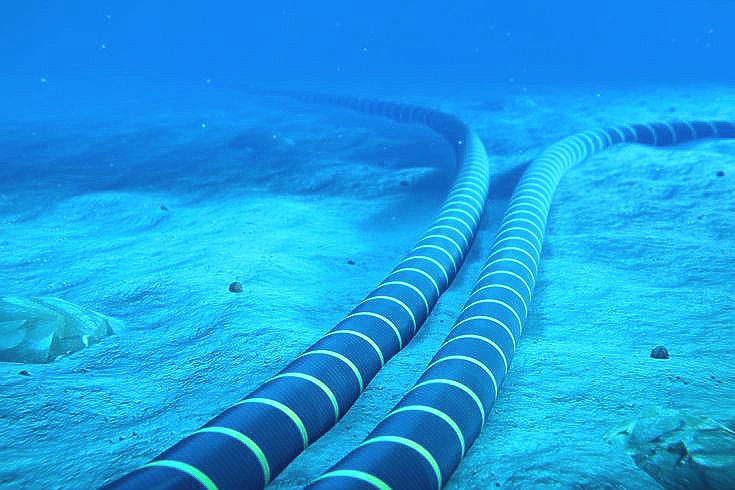

11. Meta Builds a Massive Private Network

Meta doesn’t just rent internet capacity; it builds it. In recent years the company has invested heavily in its own global network: data centers, regional fiber, and even multi-billion-dollar subsea cable projects designed to carry Meta traffic directly between continents. These private links reduce latency, give Meta greater control over capacity during peak events, and support bandwidth-hungry features like video and AI. Because Meta is increasingly the sole owner and user of some routes, outages or upgrades to this infrastructure can affect many services at once, but the payoff is faster, cheaper transport of enormous volumes of data across the globe.

12. Short Video Is the Engine of Instagram’s Growth

Meta shifted Instagram’s product strategy toward short, watchable video (Reels) after time-spent and engagement metrics showed that people prefer video. Reels now drives a major portion of Instagram’s ad revenue growth and shapes what creators post; the platform tunes feeds and recommendations to favor short videos that keep viewers watching. This strategic move explains many UX choices, such as autoplay, vertical format, and creator monetization tools, and clarifies why Instagram’s layout and algorithmic priorities have shifted so visibly in recent years. Advertisers follow attention, so Reels’ success has directly influenced Meta’s ad products and measurement systems.

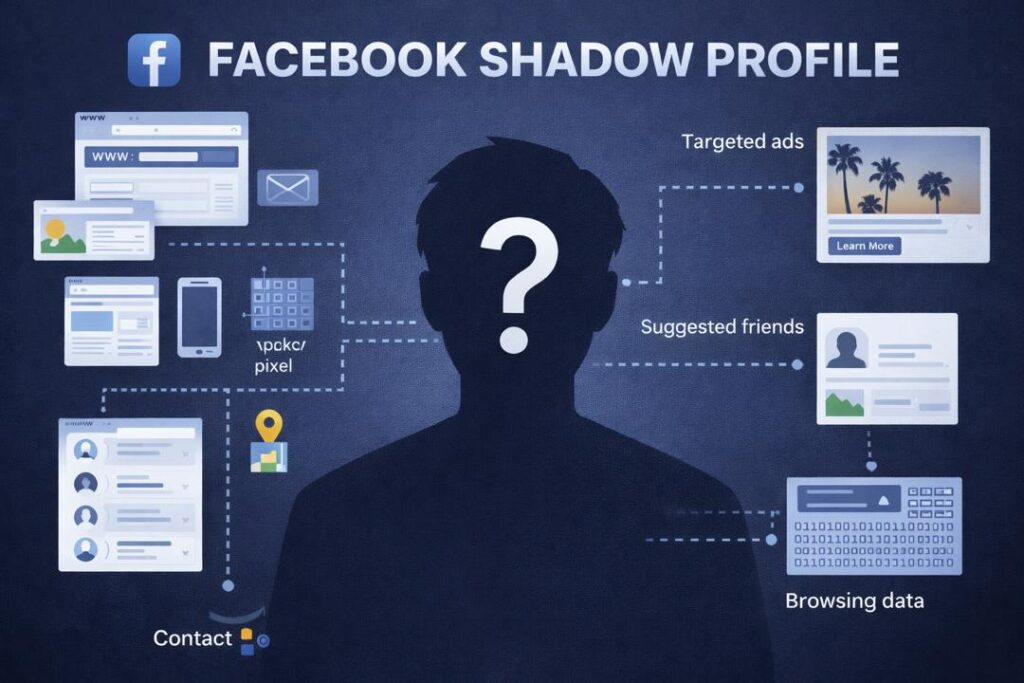

13. “Shadow Profiles”: Tracking Beyond an App Account

Researchers and privacy reports have shown that Meta can assemble profile data even for people who don’t have active Facebook accounts, using tracking pixels, social plugins, and login and analytics tools found across millions of websites. These so-called “shadow profiles” compile browsing signals, contact imports, and cross-site activity to infer interests and contacts. That information can then be used for ad targeting or friend suggestions. The degree of off-platform tracking has been repeatedly documented in academic studies and regulatory filings, and it’s a major reason why privacy advocates and regulators push for stricter cookie and tracking rules.

When users know how their data is used, how content is moderated, and why certain features exist, they can make more informed choices about how they engage online. In a world where social platforms shape conversations, culture, and commerce, a little transparency goes a long way.